Bootstrapping k3s with ArgoCD, Cilium, and MetalLB

For a long time I ran a vanilla Kubernetes cluster (kubeadm) as a single-node setup on my HP EliteDesk 800 G2 SFF running Debian. It got the job done, but the hardware always felt underutilized, and I wanted a more realistic, production-like environment for experimenting with high availability and scaling while making better use of the machine.

Cut to last weekend: I finally installed Proxmox (something Sujeeth has been nudging me to do for ages), and my homelab instantly felt like it had levelled up! VNets, snapshots, easy VM management… I was pumped!

Access Setup

My goal was to replace that lonely single node with a lightweight, multi-node k3s cluster. Fun fact: in a k3s cluster, you won’t spot separate pods for the API server, kube-proxy, or kube-controller-manager in kube-system. Why? Because k3s packs them all into a single tiny binary!

My setup consisted of:

- one control plane node

- two worker nodes

All three VMs lived inside an isolated Proxmox VNet, so there was no direct way to reach them, and I really didn’t feel like dealing with port forwarding or unnecessary WireGuard gymnastics. So I did what any sleep-deprived, sane person would do at 2:30 AM: I installed Tailscale on the control plane. From there, I could hop into the worker nodes via SSH whenever I needed to. That extra access was purely for convenience during setup, because, honestly, I’ll take SSH over clunky VNC any day.

k3s installation (1 server, 2 agents)

k3s ships with Traefik by default, but I’m more comfortable managing nginx, and since I plan to move toward Gateway API later, it didn’t make sense to invest effort in a setup I’d eventually replace.

I’d worked with Calico before and found it solid, but I always wanted to try Cilium because of its eBPF-powered approach to networking and security. This was a good time to make the switch.

```shell
curl -sfL https://get.k3s.io | INSTALL_K3S_EXEC='--flannel-backend=none --disable=traefik --disable-network-policy' sh -
```
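The agents need a join token from the server. k3s stores it on the server node at /var/lib/rancher/k3s/server/node-token, which is normally readable only by root. Below is a minimal sketch of a hypothetical helper, print_agent_join, that reads that file and prints the agent install command; the TOKEN_FILE override is an assumption added only so the helper can be tried without a real server.

```shell
# Hypothetical helper: print the k3s agent install command for a given server IP.
# Run it on the k3s server as root, since node-token is normally root-only.
# TOKEN_FILE is an assumed override for testing; it defaults to the k3s path.
print_agent_join() {
  server_ip="$1"
  token_file="${TOKEN_FILE:-/var/lib/rancher/k3s/server/node-token}"

  if [ -z "$server_ip" ]; then
    echo "usage: print_agent_join <server-ip>" >&2
    return 1
  fi
  if [ ! -r "$token_file" ]; then
    echo "cannot read $token_file (are you root?)" >&2
    return 1
  fi

  # $(cat ...) strips the trailing newline from the token file
  token=$(cat "$token_file")
  printf 'curl -sfL https://get.k3s.io | K3S_URL=https://%s:6443 K3S_TOKEN=%s sh -\n' \
    "$server_ip" "$token"
}
```

Source it in a root shell on the server, call print_agent_join with the server’s IP, and paste the printed line on each worker.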

The k3s install was mostly straightforward, though not without its quirks. At first, the agents couldn’t resolve the hostname in K3S_URL (https://controlplane:6443) during bootstrap, so I fell back to the server’s IP:

```shell
# didn't work
curl -sfL https://get.k3s.io | K3S_URL=https://controlplane:6443 K3S_TOKEN=mynodetoken sh -

# worked
curl -sfL https://get.k3s.io | K3S_URL=https://192.168.1.10:6443 K3S_TOKEN=mynodetoken sh -
```
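A cheap way to catch this before the installer runs is to check name resolution first. The sketch below (resolve_server_url is a hypothetical helper, not part of k3s) uses getent hosts, which follows the system resolver’s lookup order (/etc/hosts, then DNS on a stock Debian install), and falls back to the IP when the name doesn’t resolve.

```shell
# Hypothetical pre-flight helper: build K3S_URL from a hostname if it resolves,
# otherwise fall back to the server's IP. Assumes glibc's getent (present on Debian).
resolve_server_url() {
  host="$1"
  fallback_ip="$2"
  port="${3:-6443}"   # default k3s API port

  if getent hosts "$host" >/dev/null 2>&1; then
    echo "https://${host}:${port}"
  else
    echo "warning: $host does not resolve, using $fallback_ip" >&2
    echo "https://${fallback_ip}:${port}"
  fi
}
```

For example, K3S_URL=$(resolve_server_url controlplane 192.168.1.10) picks whichever form will actually work, and the result can be passed to the agent install in place of the hardcoded URL.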

Once that clicked, the cluster was alive! Well, almost: with Flannel disabled there was no CNI yet, so the nodes sat in NotReady.

Cilium for Networking Fun

Installing the cilium CLI was painless: I grabbed the latest version, verified the checksums, dropped the binary into /usr/local/bin, and got rolling:

```shell
# Fetch the latest stable Cilium CLI release for this architecture
CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/main/stable.txt)
CLI_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi
curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

# Verify the tarball, then extract the binary into /usr/local/bin
sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum
sudo tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin
rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}

# Install Cilium, handing out pod IPs from k3s's default cluster CIDR
cilium install --version 1.18.1 --set=ipam.operator.clusterPoolIPv4PodCIDRList="10.42.0.0/16"

# Wait until the Cilium agents and operator report ready
cilium status --wait
```

Seeing cilium status finally go green was a rush: pod networking was up!

ArgoCD & GitOps Bliss

ArgoCD setup was smooth. I hooked it to my GitOps repo and went with an App of Apps pattern.

```
.
├── apps
│   ├── Chart.yaml
│   ├── templates
│   │   └── applications.yaml
│   └── values.yaml
├── cert-manager
│   └── clusterissuer.yml
├── clip
│   └── clip.yaml
├── external-dns
│   └── externaldns.yaml
├── metallb
│   └── config.yaml
├── vaultwarden
│   ├── Chart.yaml
│   ├── templates
│   │   ├── deployment.yaml
│   │   ├── _helpers.tpl
│   │   ├── ingress.yaml
│   │   ├── NOTES.txt
│   │   ├── pvc.yaml
│   │   ├── pv.yaml
│   │   ├── service.yaml
│   │   └── tests
│   │       └── test-connection.yaml
│   └── values.yaml
└── ytdlp-webui
    └── ytdlp-webui.yaml
```

The cool part? Most of my apps were Helm charts from public repos, so I didn’t have to create a directory for each one: almost everything was driven by the values.yaml of the root apps chart. Super tidy and easy to manage!

Here’s what the applications.yaml looked like:

```yaml
{{- range .Values.applications }}
{{- $config := $.Values.config -}}
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: {{ .name | quote }}
  namespace: argocd
spec:
  destination:
    namespace: {{ .namespace | default .name | quote }}
    server: {{ $config.spec.destination.server | quote }}
  project: default
  source:
    chart: {{ .chart }}
    path: {{ .path | quote }}
    repoURL: {{ .repoURL }}
    targetRevision: {{ .targetRevision }}
    {{- with .tool }}
    {{- . | toYaml | nindent 4 }}
    {{- end }}
  syncPolicy:
    syncOptions:
      - CreateNamespace=true
    automated:
      prune: true
      selfHeal: true
---
{{ end -}}
```

And here’s a snippet of values.yaml:

```yaml
config:
  spec:
    destination:
      server: https://kubernetes.default.svc
    source:
      targetRevision: master

applications:
  - name: metallb
    repoURL: https://metallb.github.io/metallb
    chart: metallb
    namespace: metallb-system
    targetRevision: 0.15.2
    tool:
      helm:
        releaseName: metallb
  - name: cert-manager
    # [..SNIP..]
```

I connected my Git repo in ArgoCD, spun up the root app, enabled auto-sync, and then just sat back as the apps rolled themselves out. Watching everything come to life automatically was immensely satisfying.
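The root app can also be created from the command line with argocd app create. This is a sketch under a few assumptions: argocd login has already been run, Argo CD can reach the repository, the repo URL is a placeholder, and create_root_app is a hypothetical wrapper. It points Argo CD at the apps/ chart, which then renders one child Application per entry in values.yaml, using the same automated prune and self-heal policy as the children.

```shell
# Hypothetical wrapper around `argocd app create` for the App of Apps root.
# Assumes `argocd login` has already been run and the repo is reachable.
create_root_app() {
  repo_url="$1"              # your GitOps repo URL (placeholder)
  revision="${2:-master}"    # branch holding apps/; values.yaml uses master

  argocd app create apps \
    --repo "$repo_url" \
    --path apps \
    --revision "$revision" \
    --dest-server https://kubernetes.default.svc \
    --dest-namespace argocd \
    --sync-policy automated \
    --auto-prune \
    --self-heal
}
```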

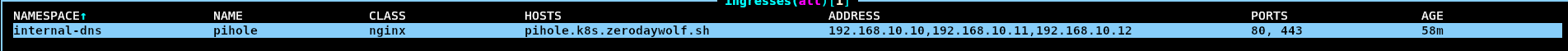

Ingress & Load Balancing Magic

Without a cloud provider, k3s’s bundled ServiceLB load balancer fills Service.status.loadBalancer.ingress with every node’s IP. Messy.

This is expected on k3s: with no cloud load balancer, ServiceLB advertises every node as a potential entry point. But it confused my DNS automation (ExternalDNS + Pi-hole). I needed something better.
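To see exactly what ExternalDNS was picking up, you can list the addresses in the Service’s status. A sketch of a hypothetical helper, lb_ingress_ips, that uses a kubectl JSONPath range expression to print one address per line:

```shell
# Hypothetical helper: print each IP in a Service's
# status.loadBalancer.ingress list, one per line.
lb_ingress_ips() {
  namespace="$1"
  service="$2"
  kubectl get svc "$service" -n "$namespace" \
    -o jsonpath='{range .status.loadBalancer.ingress[*]}{.ip}{"\n"}{end}'
}
```

For example, lb_ingress_ips ingress-nginx ingress-nginx-controller | wc -l counts the advertised addresses; the namespace and Service name are assumed ingress-nginx chart defaults. Anything above 1 means ExternalDNS sees several targets for the same hostname.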

Enter MetalLB. Following the MetalLB docs, I configured Layer 2 mode. Suddenly, Services of type LoadBalancer got a single, clean IP. Goodbye multi-IP chaos!

Edit: A few days after I shared this post, a LinkedIn user pointed out that I could use Cilium instead of MetalLB as the load balancer. It turns out Cilium used MetalLB in its own CI/CD pipelines until the project decided to reduce its dependencies on other components and shipped its own native LoadBalancer IPAM in v1.13. So I was able to replace MetalLB with the following IP pool:

```yaml
apiVersion: "cilium.io/v2"
kind: CiliumLoadBalancerIPPool
metadata:
  name: "pool"
spec:
  blocks:
    - cidr: "100.x.x.y/32"
```
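With a single /32 block, only one LoadBalancer Service can get that address by default, so it’s worth confirming that the ingress Service actually received it. A polling sketch; wait_for_lb_ip, the namespace and Service name in the example, and the 60-second default timeout are all assumptions:

```shell
# Hypothetical helper: poll until a Service gets a load balancer IP,
# then print it. Gives up after TIMEOUT seconds (default 60).
wait_for_lb_ip() {
  namespace="$1"
  service="$2"
  timeout="${TIMEOUT:-60}"
  elapsed=0

  while [ "$elapsed" -lt "$timeout" ]; do
    # Empty output (or an error) means no address has been assigned yet
    ip=$(kubectl get svc "$service" -n "$namespace" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [ -n "$ip" ]; then
      echo "$ip"
      return 0
    fi
    sleep 2
    elapsed=$((elapsed + 2))
  done
  echo "no load balancer IP for $namespace/$service after ${timeout}s" >&2
  return 1
}
```

For example, wait_for_lb_ip ingress-nginx ingress-nginx-controller should print the pool’s single address once Cilium has assigned it.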

Tailnet Integration

Since I access the services over Tailscale, I installed the Tailscale operator to bring the cluster onto my tailnet. After that, it was mostly housekeeping:

- Expose the two critical services on my tailnet: DNS and the load balancer

- Configure split DNS for my domain and point the tailnet’s nameservers to Pi-hole, so tailnet clients resolve internal services cleanly without ever touching my LAN’s resolver.

- Configure MetalLB to hand out the load balancer’s Tailscale IP to the LoadBalancer Service (ingress-nginx)

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default
  namespace: metallb-system
spec:
  addresses:
    - 100.x.x.y-100.x.x.y
  autoAssign: true
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default
  namespace: metallb-system
spec:
  ipAddressPools:
    - default
```

With everything in place, I synced all the applications, and that was it: the cluster services I needed were instantly reachable from any client on my tailnet.
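To double-check split DNS from a tailnet client, resolve one of the service hostnames and compare the answer with the load balancer’s Tailscale IP. A sketch using dig +short (from Debian’s dnsutils package); check_dns and the names in the example are placeholders, not part of this setup:

```shell
# Hypothetical check: does NAME resolve to EXPECTED_IP from this machine?
# Optionally pass a specific nameserver (e.g. Pi-hole's tailnet IP) as $3.
check_dns() {
  name="$1"
  expected_ip="$2"
  server="$3"

  # ${server:+...} adds "@server" only when a nameserver was given
  answers=$(dig +short "$name" ${server:+"@$server"})
  # dig +short may print CNAME targets before the A record, so match any line
  if printf '%s\n' "$answers" | grep -qxF "$expected_ip"; then
    echo "ok: $name -> $expected_ip"
  else
    echo "mismatch: $name -> ${answers:-<no answer>}" >&2
    return 1
  fi
}
```

For example, check_dns vault.example.com 100.x.x.y checks what the client’s own resolver returns, while adding Pi-hole’s address as a third argument queries it directly.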

Wrapping It Up

Going from single-node kubeadm to multi-node k3s has been an absolute blast. My homelab finally feels alive, and pushing updates is much easier now that GitOps keeps everything in sync automatically. MetalLB plus Tailscale makes the cluster accessible without ugly reverse proxies, and Cilium lets me tinker with the wild powers of eBPF!